A look at "Nightshade", a tool for protection from large text-to-image generative models

Calling your "poisoning" tool Nightshade is a baller move

This is written mostly for a non-technical audience - if you already know (mostly) how image models work this probably won't contain much new information for you. If you're an artist or creative who somehow stumbled your way in here; welcome!

In this post I try to explain in non-technical to semi-technical terms what the tool "Nightshade", marketed as a copyright protection tool for artists and creatives, actually is, and why, even though it's another AI model, that's not reason for concern by itself. First I'll go through the popular news article about it, then the accompanying academic preprint article, and provide comments and explanation throughout.

I first learned about Nightshade through twitter, where artists were sharing a popular article about a new tool that would let them fight back against the text-to-image AI models that are - in essence - profiting off of the work of artists. That article was in MIT's Technology Review, which gives this introductory paragraph:

A new tool lets artists add invisible changes to the pixels in their art before they upload it online so that if it’s scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways.

While that's an evocative sentence ("invisible changes to the pixels"), in the coverage I'd seen so far - both the Technology Review article and on social media - there wasn’t much discussion of how it actually works, but I had a sneaking suspicion that it's actually another AI model subtly changing some pixels in these artists' work to turn it into what is know as an 'adversarial attack' on the text-to-image generative models.

Let’s take a closer look at the Technology Review article: A closer reading seems to indicate a process involving AI models, as in the below quote I interpret the phrase "manipulate machine-learning models" as meaning "trained another generative adversarial network":

Going into the arxiv paper itself brings out some more details, but let's start from the beginning.

What is Nightshade?

'Nightshade' is a tool meant to "poison" the training data of future text-to-image models (definition of "poison" to be explained). The authors clarify that techniques like these have been studied in AI (deep neural networks) before, but that they are limited by needing a large proportion of the training data to be "poisoned" - 20% or more.

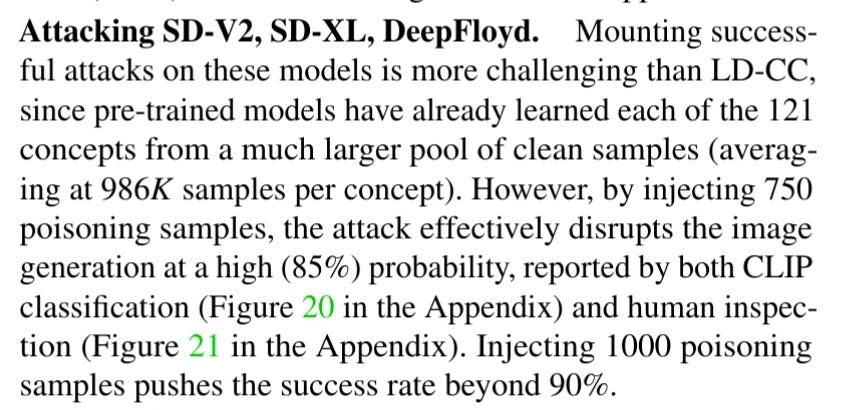

However, that's what's needed to corrupt the overall output of big models. The authors claim - and show evidence - that you can poison specific prompts with much less data, because you can easily poison 20% of the data source for single prompts - even ones as generic as "dog" (needing "500-1000" poisoned samples), but importantly also the names or styles of artists.

Now, if you're like me, you might have caught on to the relative lack of detail into how this poisoning process works (but if you know how these generative models work you probably could have guessed). Near the end of the arxiv paper introduction, the authors reveal that "Nightshade uses multiple optimization techniques (including targeted adversarial perturbations) to generate stealthy and highly effective poison samples". The keyword here is ‘optimization’, which indicates AI. So Nightshade is itself a tool that uses an AI model trained to find adversarial inputs to the text-to-image models, and which is then used to imperceptibly-to-humans turn artists' works into adversarial inputs at training time (as they get scraped for the training dataset).

There's still a couple of technical terms there, so let's unpack them:

- First, an LLM is a Large Language Model, a modern type of AI built using artificial neural networks. A text-to-image model is an AI model that takes text as input and 'produces' an image as output. Most of all the tech-bro hype you see around AI these days are based on these kinds of technologies.

- Adversarial input/attack: On LLMs and text-to-image models, an "adversarial" input is an input (a prompt or even an image) that exploits certain properties of the model to "fool" it into classifying an object as something different than it really is. This is now a general term that you might see bandied around.

- Training time: most of the large modern AIs have two "phases" of development. The first is training time - this is when the machine looks at all the data in their training set and tries to "learn" regularities in it, and how to generate things like it. The second is "inference time". This is after training is done, and the software is released like we see with ChatGPT and the various text-to-image models out there. However, for most text-to-image models, they will often be released as version 1.x or 2.y etc., then they keep training that same model, and release the next "version" as 2.z, 3.a, etc. So they really do training->inference->training->inference in this kind of schedule. That means that the early training will often still "stick" in the model.

- Scrape: this is the general term used for "use software tools to gather lots of data of specific types", meaning in this context "find images on the internet and add them to the training dataset."

But what is the “poisoning”?

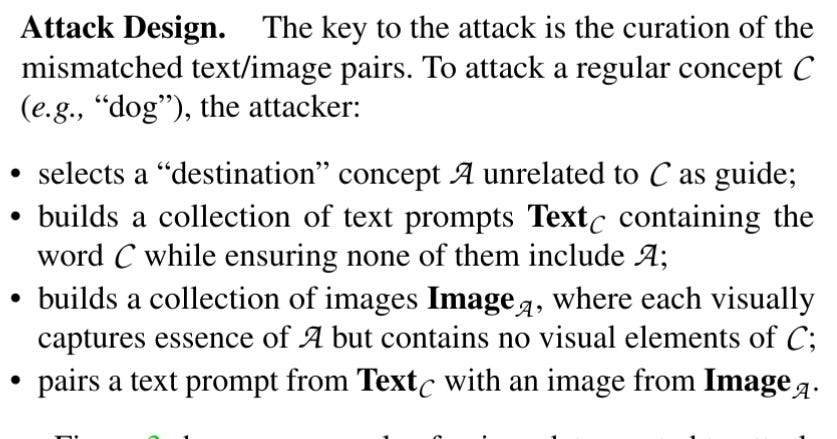

Now that we know what adversarial attacks are, what specific "poisoning" method does Nightshade use? In their first experiment, the authors report a simpler attack than the fully developed Nightshade:

A poisoining is an attack like this, where the pool of training data gets distorted by these mismatched text-image pairs. So, from this basic attack strategy, the researchers further develop Nightshade as a tool meant to be even better at a) successful poisoning with fewer samples, and b) avoiding detection, both human and automated.

They ensure the first by 1) making sure that each Text_C prompt exclusively targets the regular concept, and 2) that the "destination" concept is as unrelated to C as they can feasibly make it. Here, 2 can be done because researchers have open access to their own text-to-image generative model, and ask it specifically for responses related to A.

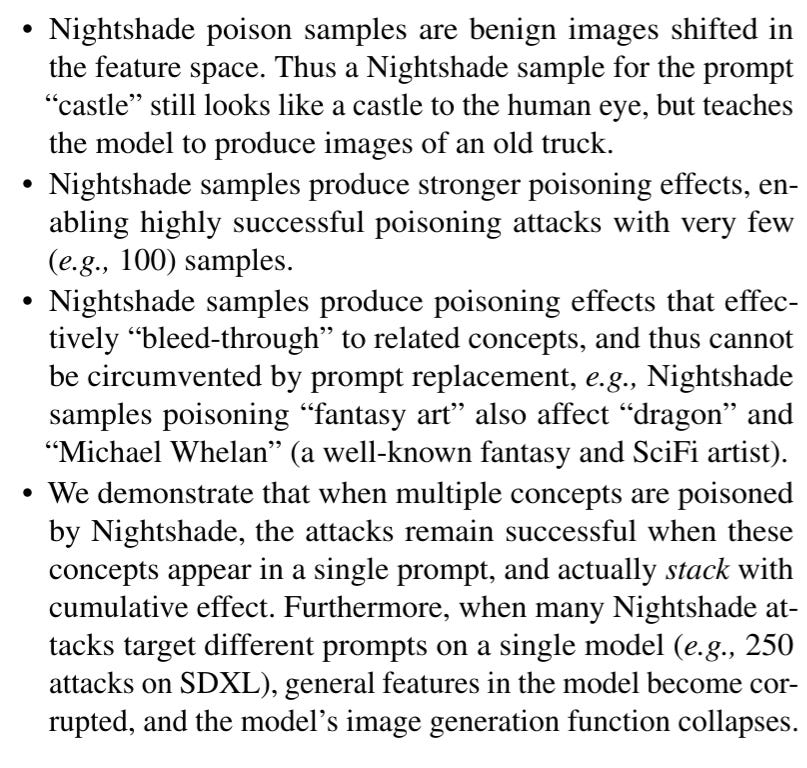

The researchers claim some pretty cool features of Nightshade

Crucially, Nightshade relies on there existing (at least one) open-source text-to-image model from which it can learn adversarial perturbations.

Specifically, Nightshade creates adversarial poisoning using this process:

Selecting its poison text prompts through the use of a similarity metric called Cosine similarity.

Generating "anchor" images based on A from the researchers' open access text-to-image generator.

Then, using the poisoned text, related natural images, and "anchor" images, make the natural image relate to the destination concept A instead of the origin concept C by making as small changes in the natural image as possible1.

The researchers go on to discuss some of the specific metrics they use, details for the interested in the preprint.

One (qualitative) thing to highlight is how Nightshade (and tools like it) rely on at least one of the following being true:

The training and release strategy of large text-to-image model providers continues as it has, i.e. future models retain the training of past models.

Different large text-to-image models will learn "similar enough" "inner models", such that adversarial attacks on one will affect another as well, despite having gone through completely different training runs.

Let's unpack these slightly:

1 is the case because Nightshade aims to protect artists in the future as well, not just now. To do this, they take advantage of a specific fact about how these text-to-image models like Dalle-2/3 are trained; subsequent versions of the software (Stable Diffusion (SD) 1.0, SD 1.5, etc.) are not entirely new models, but builds on the training done by previous versions - the researchers note this under the heading "Continuous Model Training".

2 is slightly more wibbly-wobbly and up in the air, but it's something that might be the case, or it might not. More generally, this falls under the natural abstraction hypothesis, which says that the physical world abstracts well, and that different (learning) systems will find the same "hooks" (dimensions) from which to make summaries (concepts). If this hypothesis is true, the "inside" of one text-to-image model might look very similar to the inside of another, because the data they were trained on - even though it might have been different sets of images, in a stochastic (random) process - were the same on these important dimensions. These abstractions might be things like 3D space, objects, animals, etc. And because they look "the same" the attacks that work on one might work on another. Now, there's a lot of might's in those sentences, and it's not entirely known how this might play out - but if 1 fails and models are no longer trained continuously, due to the natural abstraction hypothesis, there's some hope tools like Nightshade could continue working.

So, that's how the attacks work, and why they might continue working. But the researchers also take some time to discuss ways Nightshade might work, or how the text-to-image models could defend themselves against the attacks. The bottom line here is that offense - Nightshade attacks - are stronger than defense. This is partially because LLMs and multimodal models have such large data sets, which makes it very difficult to identify the poisoned data, and because defense strategies and methods aren't that sophisticated.

Finally there's a section titled "Poison Attacks for Copyright Protection", where, while the way the tool was talked about in the article and on social media was very pro-independent artist, the example the researchers use in the preprint is a little more...big business: "Any stakeholder interested in protecting their IP, movie studios, game developers, independent artists, can all apply prompt-specific poisoning to their images, and (possibly) coordinate with other content owners on shared terms. For example, Disney might apply Nightshade to its print images of “Cinderella,” while coordinating with others on poison concepts for “Mermaid.”"

So, that's Nightshade! If you heard about it when it first made its rounds on social media, I hope this has given you some more insight into how it works, and that you have a more informed opinion on it - and especially, if you're an artist, how you now feel about using it. To me, it seems generally good, and that the researchers want to help people take ownership of their creative endeavours and skills where they can. On the other hand, there's also reason to be pessimistic about the continued livelihood of artists with the present and expected future development in text-to-image generative AI models. It sucks to say, but they seem to be getting better still, and I haven't yet read a clear and detailed argument for why that will stop soon (even though such arguments exist for LLM text models).